When working with AI coding agents, context is expensive real estate. iOS build commands like xcodebuild and swift test generate thousands of lines of verbose output that floods your context window.

The Cost of Verbose Build Output

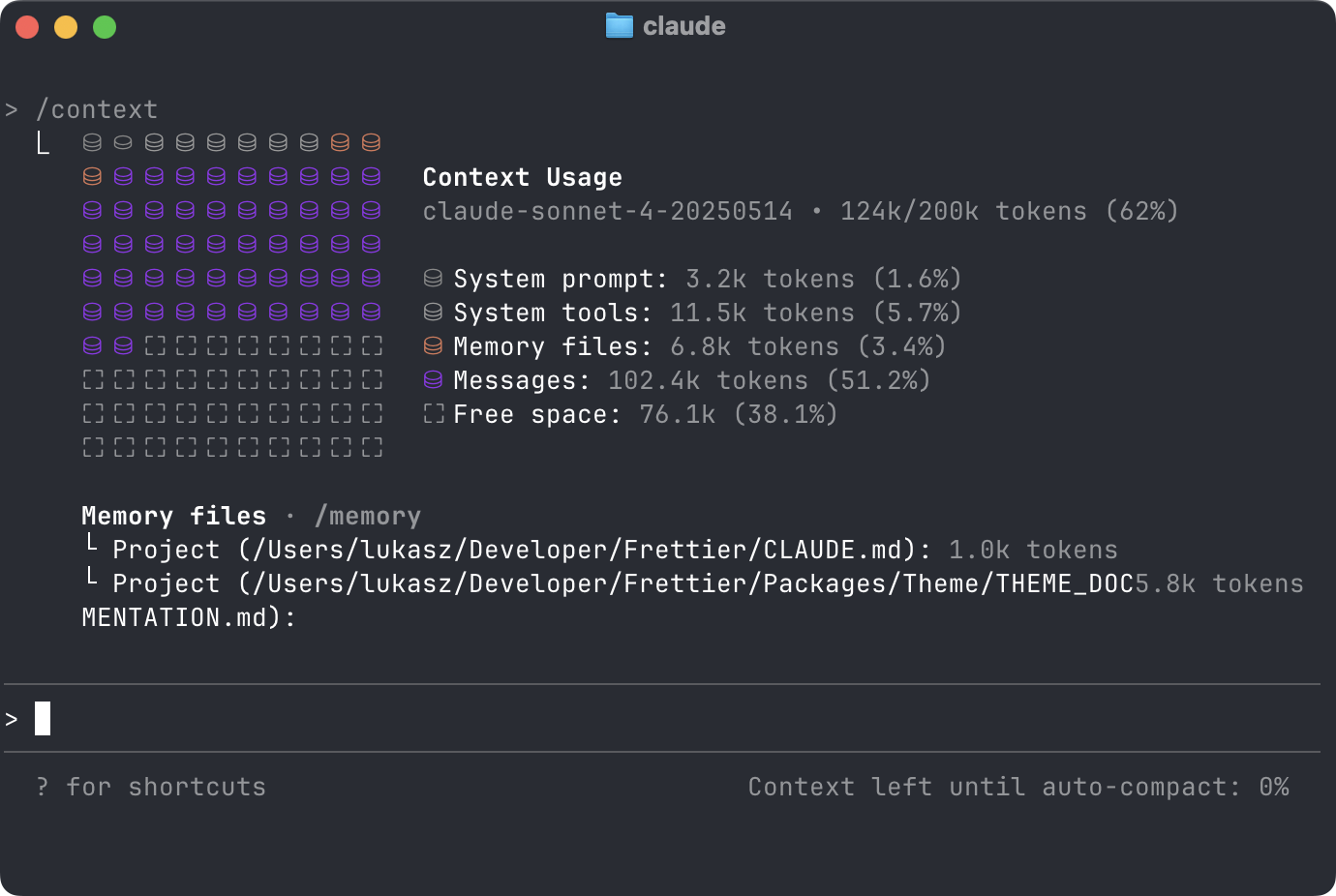

When working with AI coding agents, every tool call, file read, and command execution eats into your available tokens. A typical Claude Code session might look like:

See that Messages usage? That's where your tool output resides

Notice how build output can dominate your context? That’s where the problem starts.

Build Output Problem

In iOS projects, xcodebuild and Swift Package Manager commands are incredibly verbose. A simple swift test can dump hundreds of lines about compilation steps, dependency resolution, and build progress. Humans skim and filter this naturally. AI agents read every single token.

As your project grows, the output grows too. Small projects might generate a few hundred lines. Larger codebases with multiple targets and dependencies can easily produce thousands of lines per build. The bigger your project, the more context gets wasted on build noise.

Consider this typical swift test output:

```text
[0/1] Planning build
Building for debugging...
[2/13] Compiling _SwiftSyntaxCShims dummy.c
[3/13] Write swift-version--18F169818595AF5B.txt
[4/11] Compiling _TestingInternals Versions.cpp
...
[45/91] Compiling SwiftSyntax CommonAncestor.swift
[46/91] Compiling SwiftSyntax Assert.swift
...
Test Suite 'All tests' started at 2025-01-15 10:30:45.123
Test Suite 'xcsiftTests.xctest' started at 2025-01-15 10:30:45.124
Test Case '-[xcsiftTests.OutputParserTests testFirstFailingTest]' started.
/Users/lukasz/Developer/xcsift/Tests/OutputParserTests.swift:116: error: -[xcsiftTests.OutputParserTests testFirstFailingTest] : XCTAssertEqual failed: ("expected") is not equal to ("actual")
Test Case '-[xcsiftTests.OutputParserTests testFirstFailingTest]' failed (0.001 seconds).
...
```

An AI agent needs to parse through this entire output just to understand that one test failed. The signal-to-noise ratio is terrible.

Sample output from running GRDB unit tests

What About Existing Tools?

The Apple developer community (iOS and macOS) has great formatting tools like xcpretty and xcbeautify. They make build output beautiful for humans with colors, progress bars, and clean formatting. But they’re still verbose because they target human readers.

Even their “quiet” modes produce lots of output. When all you need is “did the tests pass?”, getting 50 lines of formatted text is still too much.

Eating My Own Dog Food

I needed something different. Not prettier output, but minimal output. So I built xcsift to transform iOS build noise into structured JSON that AI agents can actually use.

That massive test failure output becomes:

```json
{
  "status": "failed",
  "summary": {
    "errors": 0,
    "failed_tests": 1,
    "build_time": "0.082"
  },
  "failed_tests": [
    {
      "test": "xcsiftTests.OutputParserTests testFirstFailingTest",
      "message": "XCTAssertEqual failed: (\"expected\") is not equal to (\"actual\")",
      "file": "/Users/lukasz/Developer/xcsift/Tests/OutputParserTests.swift",
      "line": 116
    }
  ]
}
```

Done. The agent knows everything it needs: status, what failed, where to look, how long it took.

How It Works

xcsift is just smart regex parsing using Swift’s RegexBuilder. It looks for patterns like file paths, line numbers, and error messages, then structures them into JSON. Nothing fancy, but effective.
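As a rough illustration of the RegexBuilder approach, here is a minimal sketch that turns the XCTest failure line from the log above into a structured record. The pattern, the FailedTest type, and the parseXCTestFailure function are illustrative assumptions, not xcsift's actual code; RegexBuilder itself needs Swift 5.7 or later (macOS 13+ on Apple platforms).

```swift
import RegexBuilder

// Illustrative record for one failed test. Fields mirror the JSON example in
// this post, but the type itself is an assumption, not xcsift's source.
struct FailedTest: Equatable {
    let test: String
    let message: String
    let file: String
    let line: Int
}

// Hypothetical parser for a single XCTest failure line of the form:
//   <file>:<line>: error: -[<Suite> <testName>] : <message>
func parseXCTestFailure(_ text: String) -> FailedTest? {
    let pattern = Regex {
        // File path: the shortest prefix that lets the rest of the pattern match.
        Capture { OneOrMore(.reluctant) { CharacterClass.any } }
        ":"
        // Line number, converted to Int as part of the match.
        TryCapture { OneOrMore(.digit) } transform: { Int($0) }
        ": error: -["
        // Test identifier inside the brackets, e.g. "Suite testName".
        Capture { OneOrMore(.reluctant) { CharacterClass.any } }
        "] : "
        // Everything after the separator is the assertion message.
        Capture { ZeroOrMore { CharacterClass.any } }
    }
    // wholeMatch requires the entire line to fit the pattern.
    guard let match = text.wholeMatch(of: pattern) else { return nil }
    let (_, file, line, test, message) = match.output
    return FailedTest(test: String(test), message: String(message),
                      file: String(file), line: line)
}
```

The reluctant captures stop the file path and test name from swallowing the separators that follow them, and TryCapture hands back the line number as an Int, so callers never deal with a raw Substring. Lines that don't fit the shape, such as compile progress, simply return nil.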

It handles both XCTest and Swift Testing output formats, deduplicates similar failures, and extracts exact source locations for quick navigation.
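What counts as "similar" isn't spelled out here. A minimal sketch, assuming two failures are duplicates when their file, line, and message all match, keeps the first occurrence of each and preserves the original order. The Failure type and deduplicated function are illustrative names, not xcsift's.

```swift
// Assumption: failures are "similar" when file, line, and message are identical.
// xcsift's real rule may differ.
struct Failure: Hashable {
    let file: String
    let line: Int
    let message: String
}

// Keeps the first occurrence of each failure, in the order they appeared.
func deduplicated(_ failures: [Failure]) -> [Failure] {
    var seen = Set<Failure>()
    // Set.insert reports whether the element was new, so repeats are filtered out.
    return failures.filter { seen.insert($0).inserted }
}
```

Filtering through a Set keeps the pass linear while still preserving order, which matters when the agent reads failures top to bottom.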

The Difference

Before: swift test dumps 2000+ lines into your context window.

After: swift test | xcsift gives you 15 lines of structured data.

Successful builds are even better:

```json
{
  "status": "success",
  "summary": {
    "errors": 0,
    "failed_tests": 0
  }
}
```

That’s it. Success in 7 lines.
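Notice that the success output drops build_time and the failed_tests array entirely. One way to get that behavior in Swift, sketched here under the assumption that the report is modeled with Codable (the Report, Summary, TestFailure, and encodeReport names are hypothetical, not taken from xcsift), is to make those fields optional: synthesized Encodable conformance skips nil optionals, and the convertToSnakeCase key strategy produces the failed_tests and build_time keys.

```swift
import Foundation

// Hypothetical types mirroring the JSON shapes in this post; not xcsift's source.
struct Summary: Codable {
    var errors: Int
    var failedTests: Int
    var buildTime: String?          // nil is omitted from the output entirely
}

struct TestFailure: Codable {
    var test: String
    var message: String
    var file: String
    var line: Int
}

struct Report: Codable {
    var status: String
    var summary: Summary
    var failedTests: [TestFailure]? // nil on success, so the key disappears
}

func encodeReport(_ report: Report) throws -> String {
    let encoder = JSONEncoder()
    // failedTests -> "failed_tests", buildTime -> "build_time"
    encoder.keyEncodingStrategy = .convertToSnakeCase
    encoder.outputFormatting = [.prettyPrinted, .sortedKeys]
    let data = try encoder.encode(report)
    return String(decoding: data, as: UTF8.self)
}
```

Encoding Report(status: "success", summary: Summary(errors: 0, failedTests: 0, buildTime: nil), failedTests: nil) yields only status and a summary with errors and failed_tests. Dropping empty fields rather than emitting nulls or empty arrays is exactly the kind of saving that adds up over a long agent session.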

Building for Agents, Not Humans

When building tools for AI agents, optimize for machine readability rather than human readability.

This applies everywhere:

- Log parsers should extract key metrics, not pretty-print everything
- API responses should be minimal and structured
- Error messages should be machine-parseable first

I built xcsift using Claude Code itself. There’s something satisfying about using AI agents to build better tools for AI agents. It’s a feedback loop that works.

Using xcsift

xcsift is open source, MIT licensed, and available via Homebrew:

```shell
brew tap ldomaradzki/xcsift
brew install xcsift
```

Pipe any iOS build command through it:

```shell
swift test 2>&1 | xcsift
xcodebuild test -scheme MyApp 2>&1 | xcsift
swift build 2>&1 | xcsift
```

It reads from stdin and writes JSON to stdout. Simple. The code is straightforward Swift with good regex patterns, so check it out on GitHub if you want to contribute or adapt it for other build tools.
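The stdin-to-stdout contract is what lets one tool sit behind swift test, swift build, and xcodebuild alike. Below is a minimal sketch of that filter shape; summarize and readAllLines are hypothetical names, and matching on ": error:" substrings is a deliberately crude stand-in for xcsift's real parsing.

```swift
import Foundation

// Hypothetical, deliberately crude summary in the spirit of xcsift (not its real
// logic). XCTest failures look like "<file>:<line>: error: -[Suite test] : ...",
// so they are counted as failed tests; other "error:" lines count as errors.
func summarize(_ lines: [String]) -> String {
    let errorLines = lines.filter { $0.contains(": error:") }
    let failedTests = errorLines.filter { $0.contains(": error: -[") }.count
    let errors = errorLines.count - failedTests
    let status = errorLines.isEmpty ? "success" : "failed"
    // Hand-built JSON is safe here: status is a fixed word and the counts are Ints.
    return "{\"status\":\"\(status)\",\"summary\":{\"errors\":\(errors),\"failed_tests\":\(failedTests)}}"
}

// Reads standard input until EOF, i.e. until the piped build command exits.
func readAllLines() -> [String] {
    var lines: [String] = []
    while let line = readLine() {
        lines.append(line)
    }
    return lines
}

// In a command-line target's main.swift, the entry point would be:
// print(summarize(readAllLines()))
```

Keeping the parsing in a pure function over lines, separate from the stdin plumbing, makes the filter trivial to unit test with captured build logs.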

Context is currency. Spend it wisely.